Latest News

Transform Your Workflow with AI Employee: The Ultimate Microsoft Teams Agent

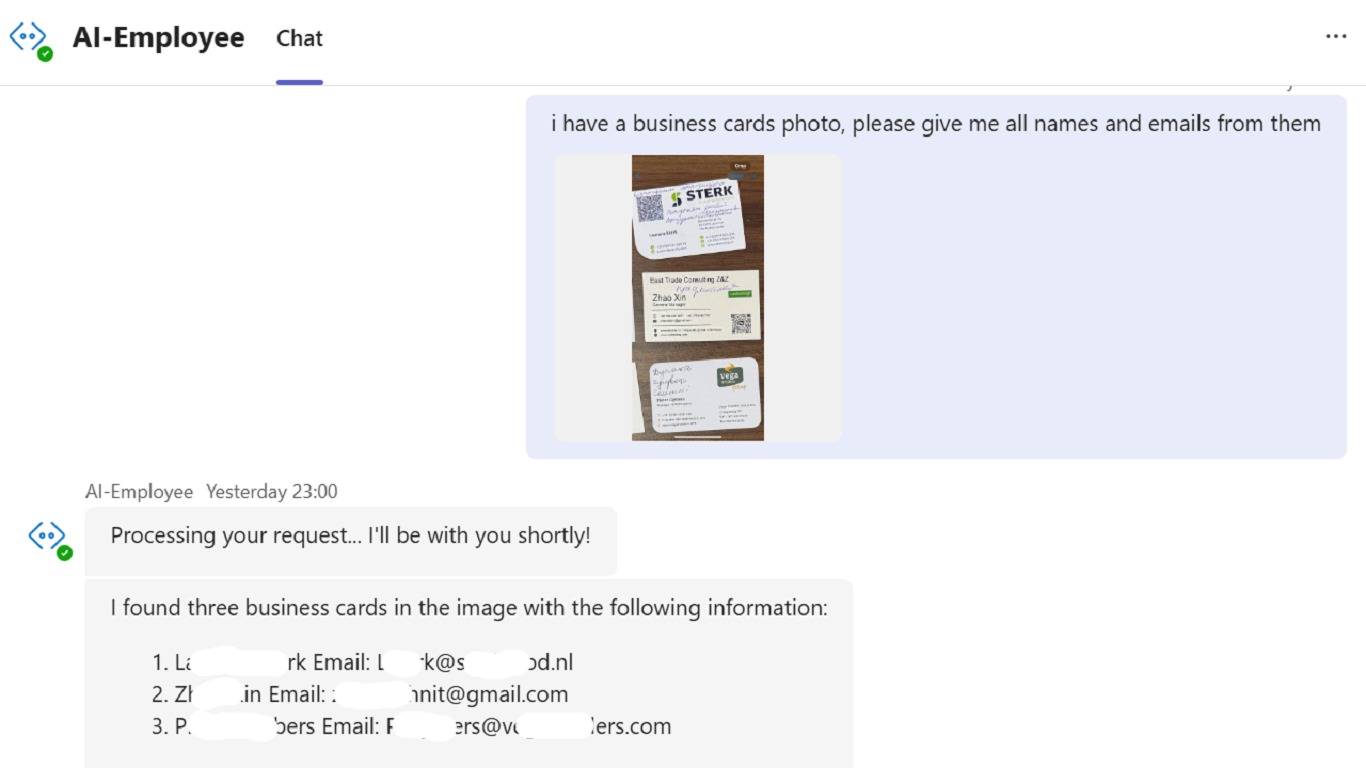

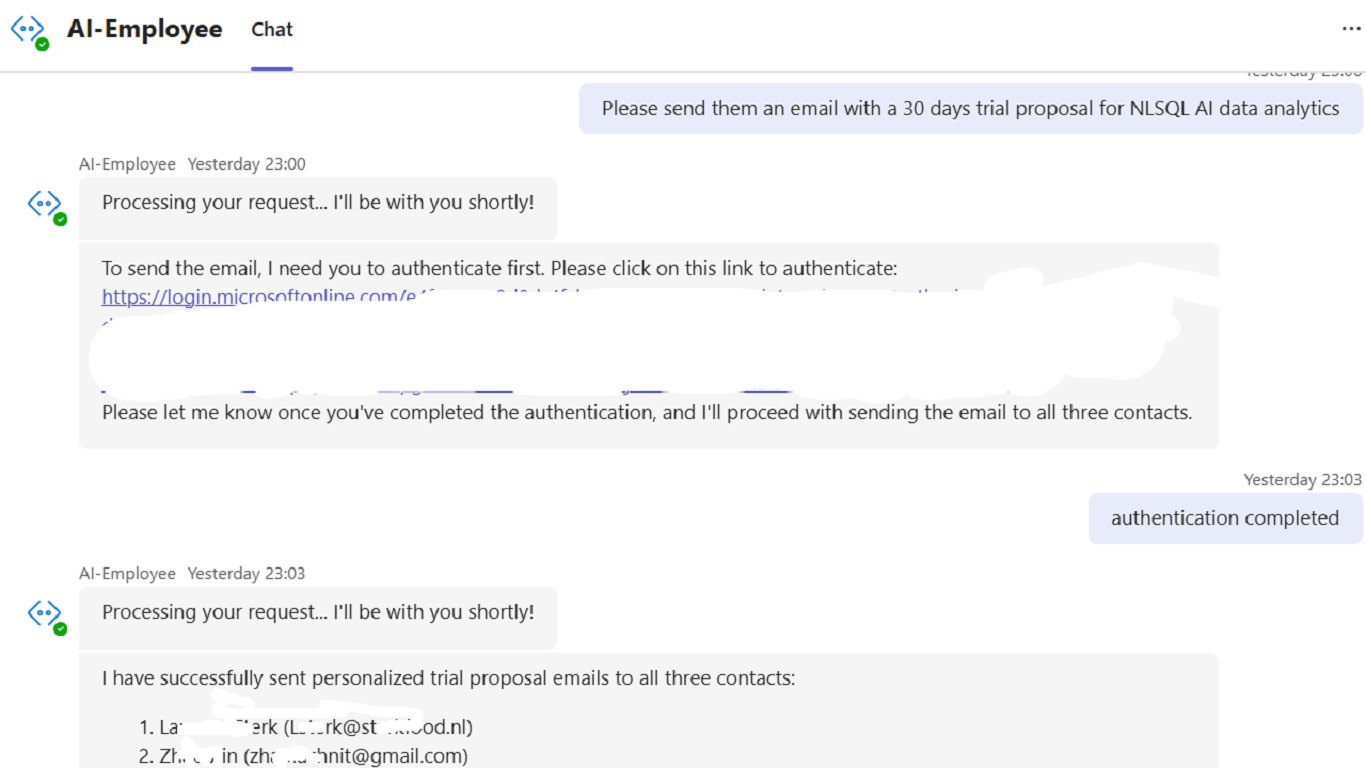

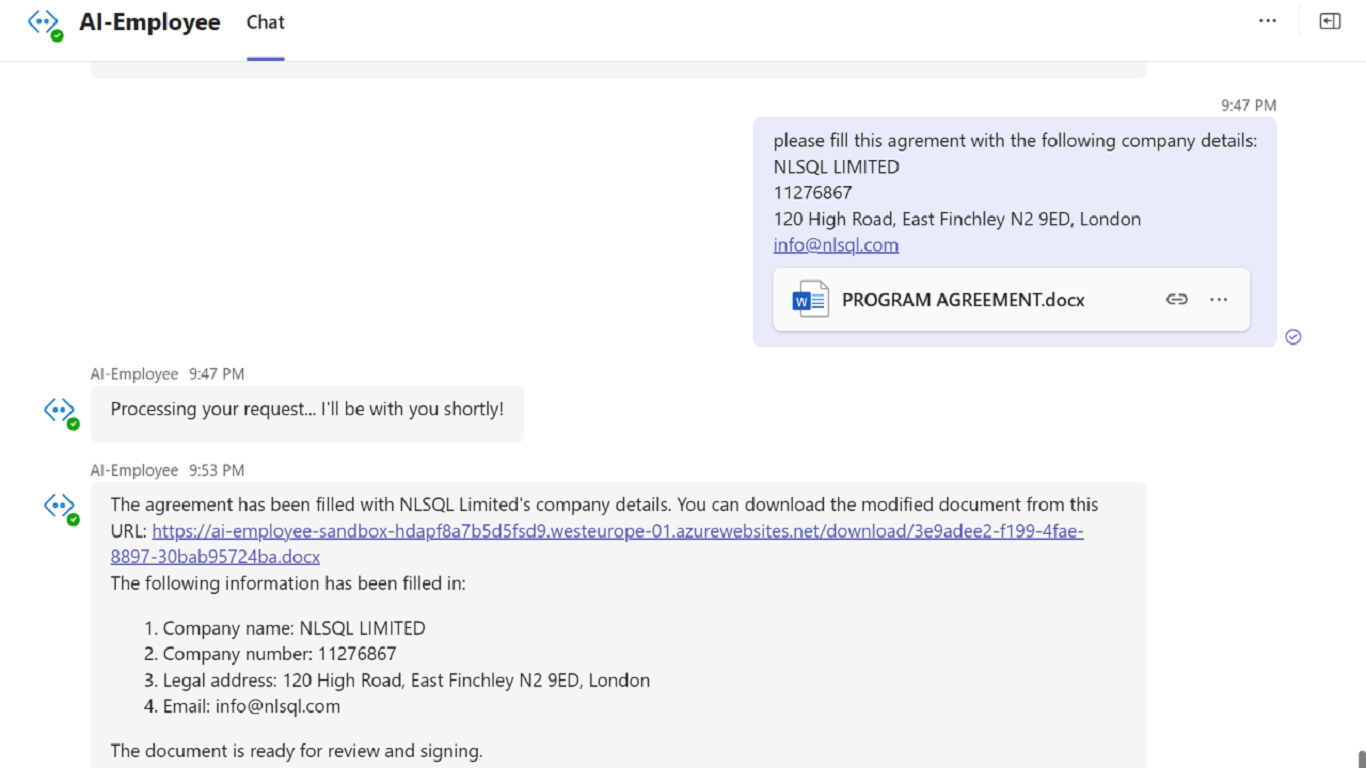

Imagine having a dedicated assistant who never sleeps, never takes breaks, and can handle multiple complex tasks simultaneously with perfect accuracy. What if this assistant could manage your emails, translate documents while preserving their design, analyze images, and seamlessly integrate with your entire Microsoft ecosystem—all without leaving your working environment?

Meet AI Employee, the revolutionary Microsoft Teams app that's changing how professionals work in the digital age.

In today's fast-paced business environment, professionals spend an average of 28% of their workweek managing emails and 19% gathering information, valuable time that could be directed toward strategic initiatives. AI Employee addresses these pain points through intelligent automation and seamless integration with Outlook, Calendar, and OneDrive, along with intelligent web search, image analysis, and advanced editing and translation of Word documents that preserves the original document design.

Imagine completing in minutes what used to take hours. Picture your team focusing on creative and strategic work while AI Employee handles …

More:

Try the 30-day trial now

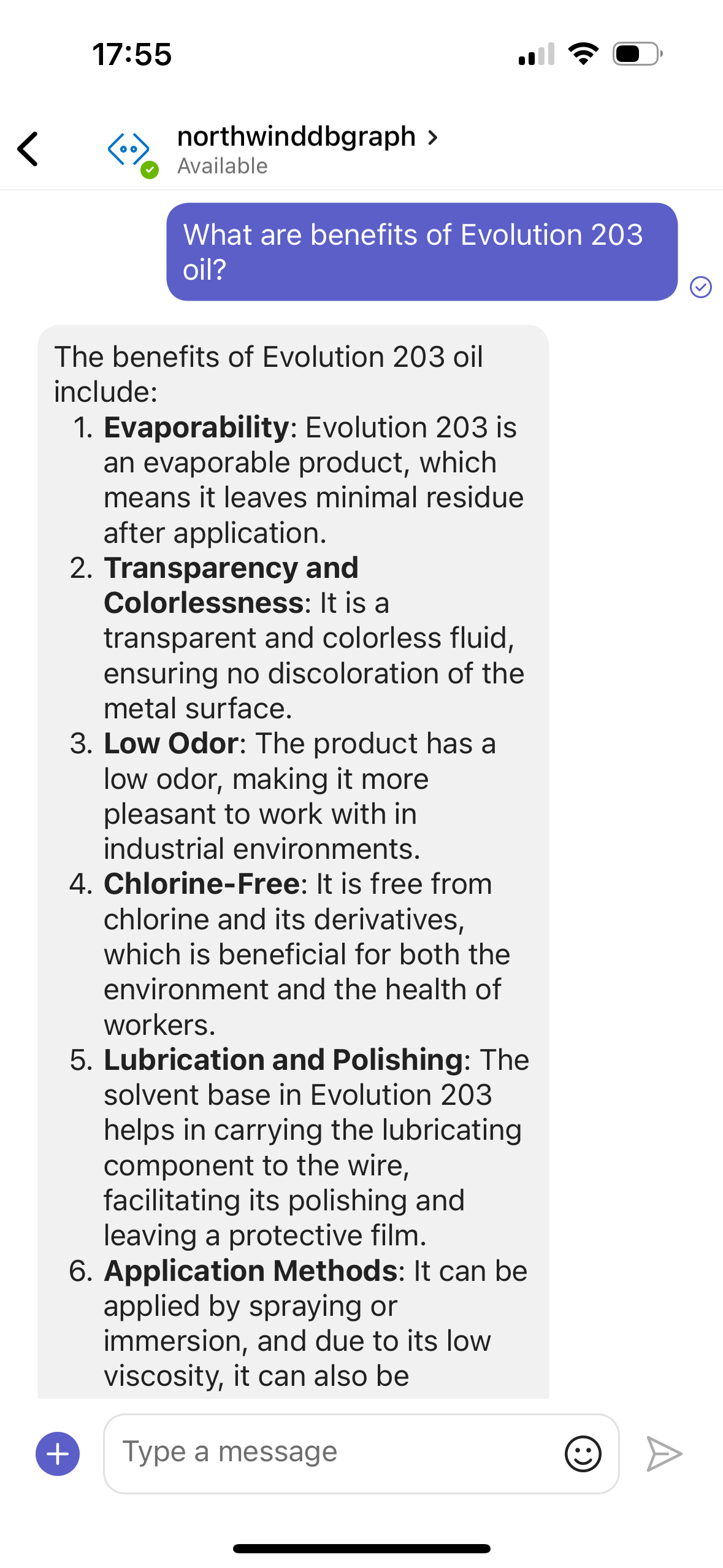

How to Easily Develop a Smart AI Consultant for Your Product Catalog

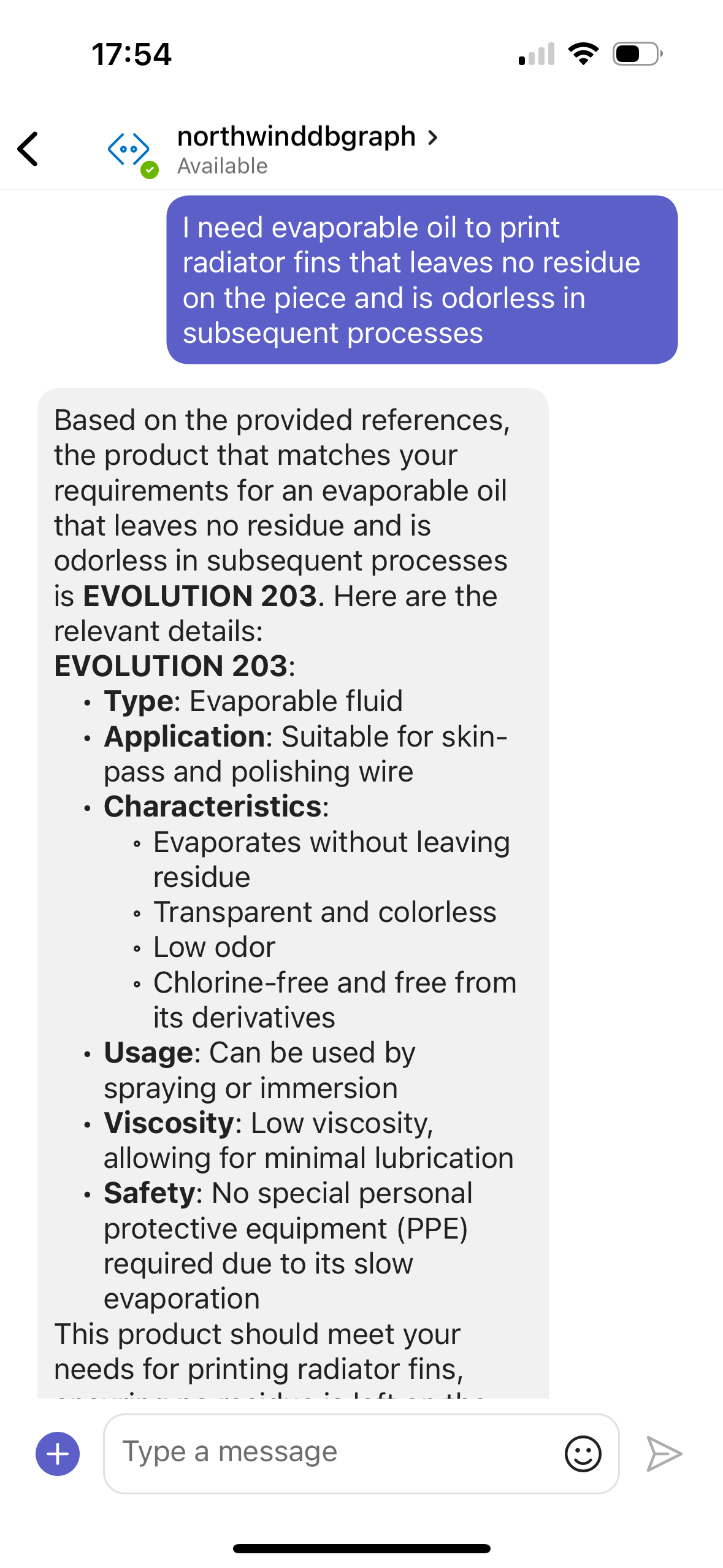

In this blog post, we will explore how the AI Agent can help you put AI to work with full knowledge of your product details, improve your customers' experience of choosing the right product, and ultimately boost your product sales.

AI Agent is designed to make it incredibly simple for businesses to create a smart AI consultant for their product catalog. With just a few easy steps, you can seamlessly integrate the AI Agent into your website or messenger platform:

1. Install "AI Agent" from the Azure Marketplace: The AI Agent is available on the Azure Marketplace, making it accessible to businesses of all sizes. You can find the direct link to this offer under this blog post.

2. Upload Your Product Portfolio: Once the AI Agent is installed, you can easily upload your company's product portfolio description files. This will enable the AI Agent to analyse and understand your product catalog …

More:

Create an AI consultant for your company's product catalog

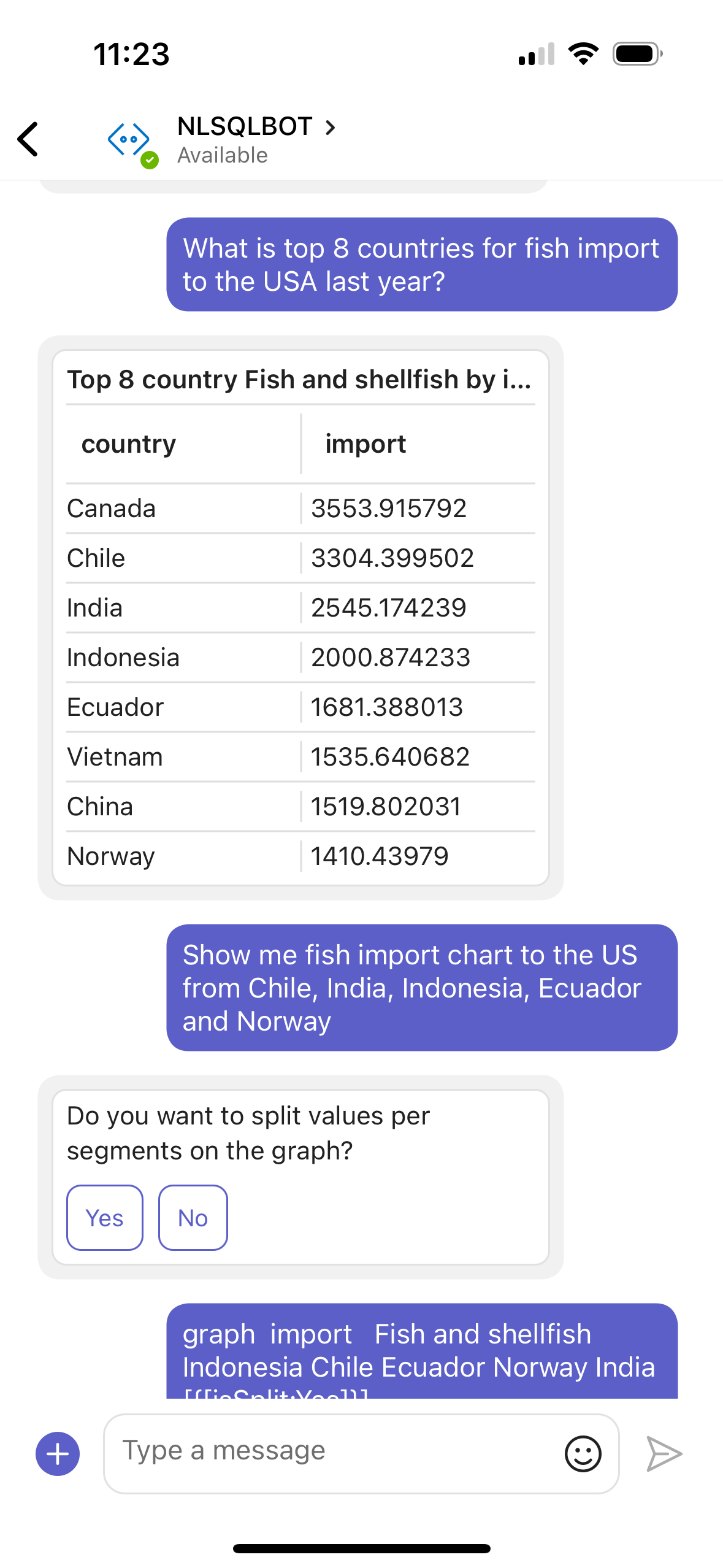

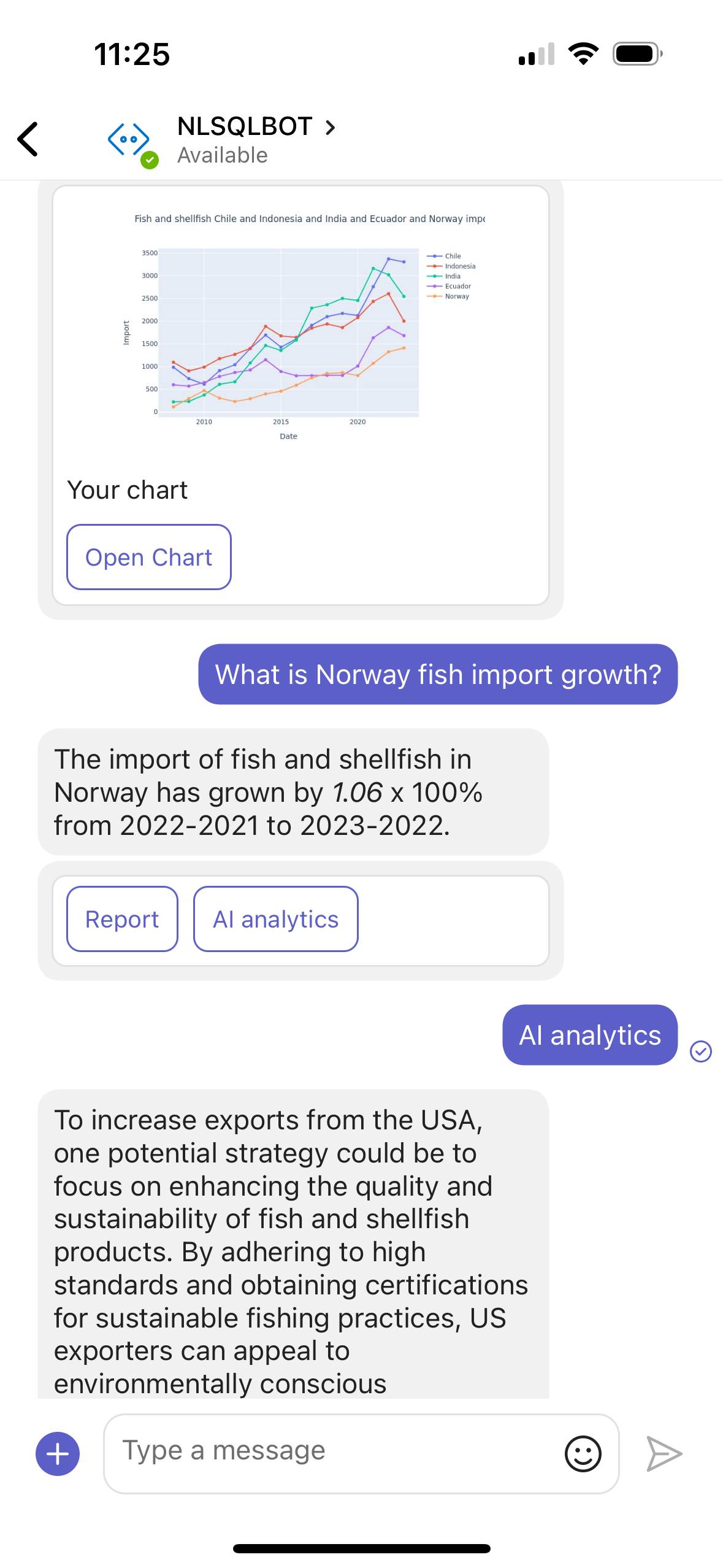

AI Business Value for Retail: Boosting Profits

The retail industry is continuously evolving, and businesses need to adapt to these changes to stay competitive. One of the most significant technological advancements in recent years is the use of Artificial Intelligence (AI) in various retail processes. In this blog post, we will discuss the value of AI in retail, specifically focusing on the NLSQL software and its use case in a real-life retail example.

AI has numerous applications in the retail industry, from inventory management to customer service. One such use case is the implementation of AI-powered analytics and decision-making tools, such as NLSQL software. This software can help retail businesses make data-driven decisions, identify anomalies, and optimise their operations.

To illustrate the value of AI and NLSQL in retail, let's consider the story of a convenience store selling whole salmon for £8 per kg. The store enjoyed healthy salmon sales, reaching £25k/month per store. However, a …

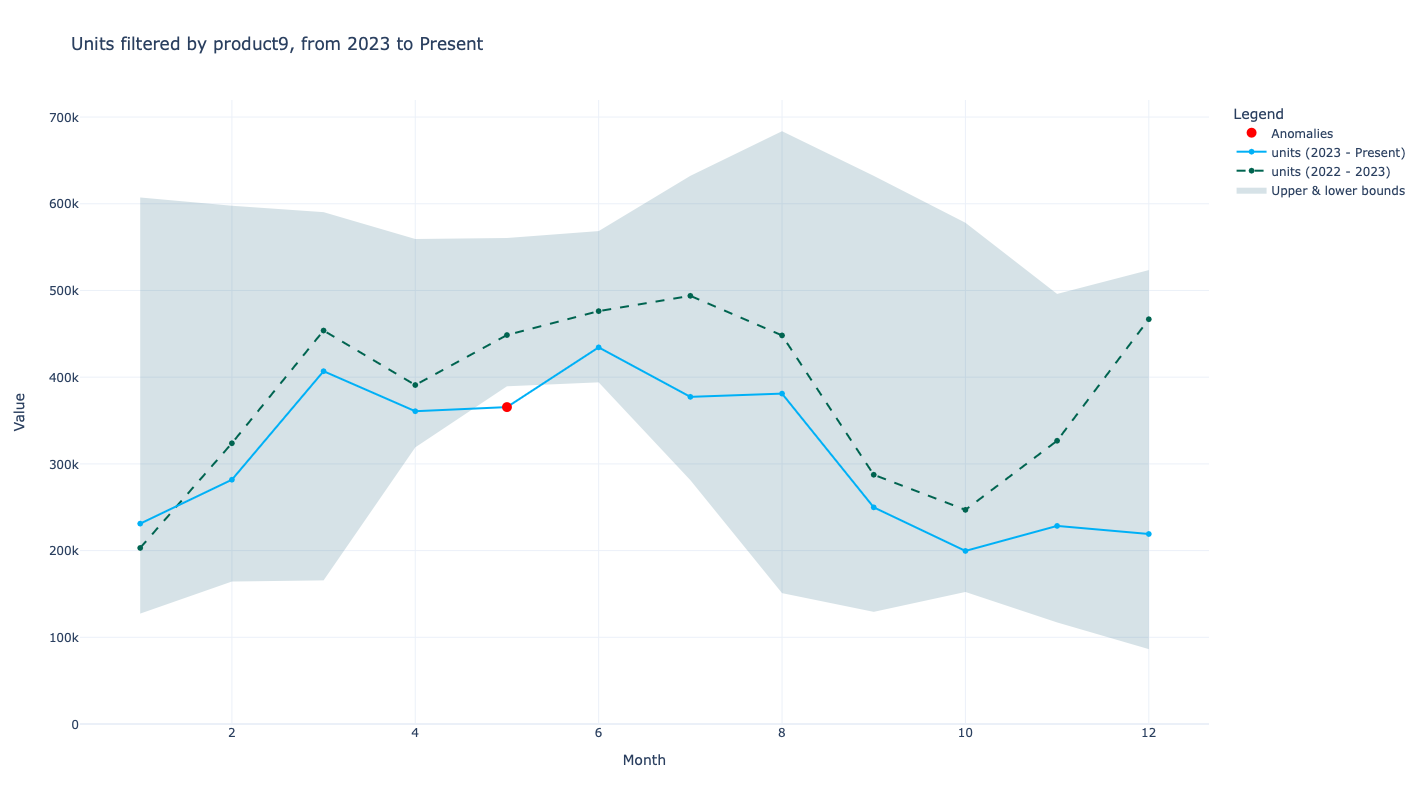

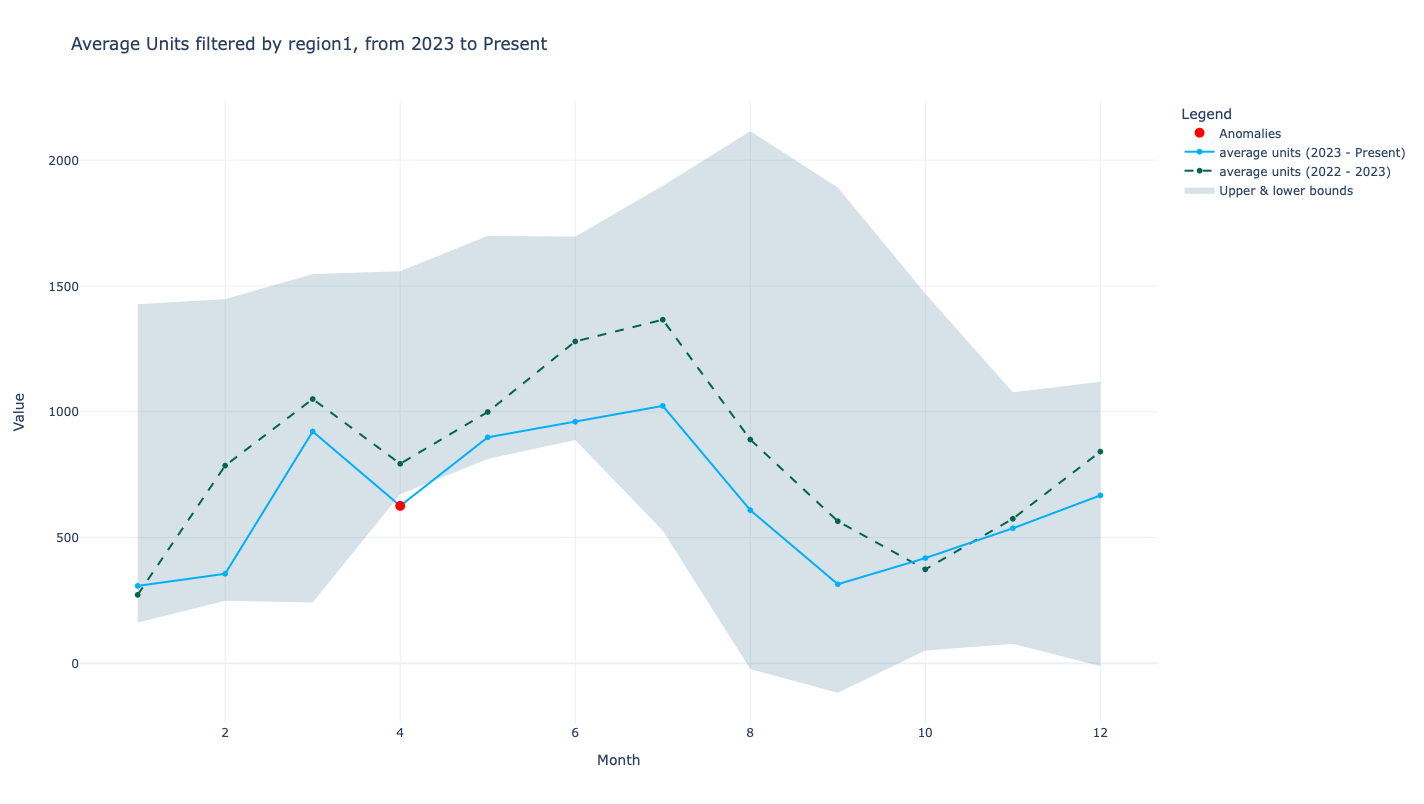

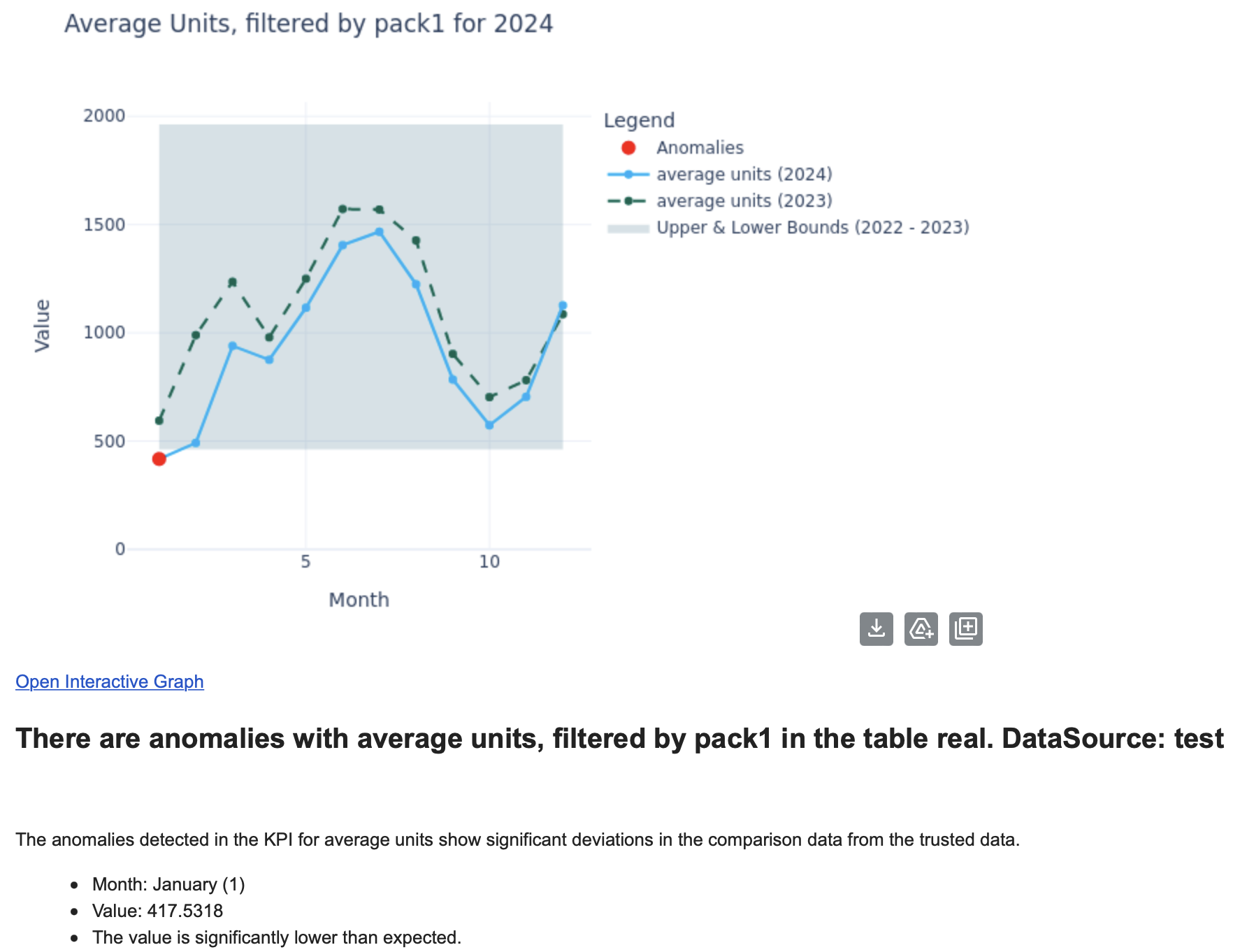

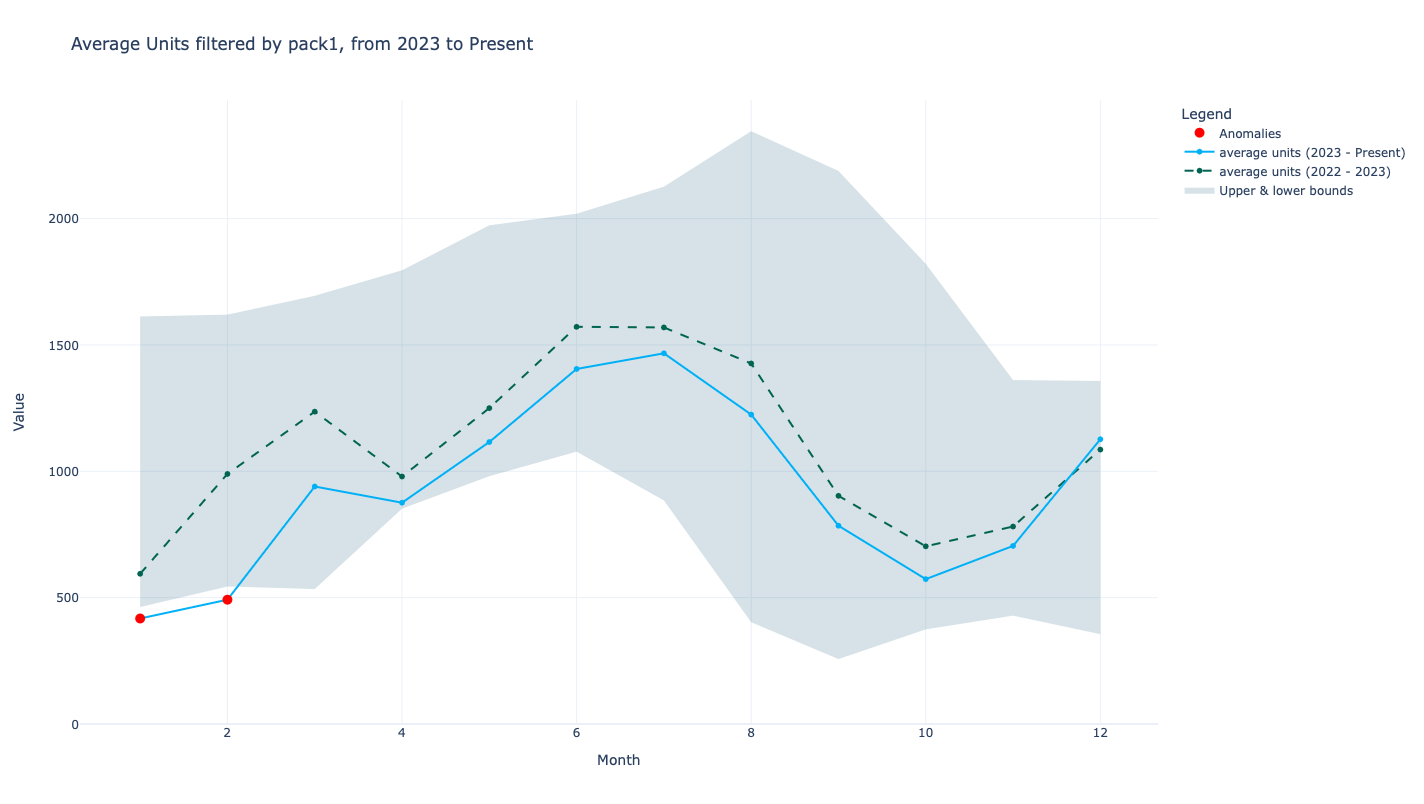

Stay Ahead of the Curve with AI-Powered Data Analytics and Anomaly Detection

In today’s fast-paced business environment, staying ahead of the curve is essential for success. One way to achieve this is by leveraging the power of AI-driven data analytics and anomaly detection. At NLSQL, we are committed to helping businesses harness the power of AI to make better-informed decisions, and we are excited to announce the launch of our new standalone product, AI Anomaly Detector.

Employees often face the daunting task of finding valuable insights hidden within vast amounts of data, akin to finding a needle in a haystack. This challenge can be overwhelming and time-consuming, especially when anomalies are not immediately obvious. To address this challenge head-on, we developed AI Anomaly Detector, a powerful tool that works alongside our existing AI Data Analytics offerings.
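To make the idea of an automated anomaly check concrete, here is a minimal sketch in Python. It is not how AI Anomaly Detector works internally; it simply assumes a common textbook technique (the modified z-score based on the median absolute deviation) and uses made-up daily sales figures. The function name `find_anomalies` and the sample data are hypothetical.

```python
from statistics import median


def find_anomalies(values, threshold=3.5):
    """Return the indices of values whose modified z-score exceeds the threshold.

    Illustrative only: uses the median absolute deviation (MAD), which is
    less sensitive to the outliers themselves than mean and standard deviation.
    """
    med = median(values)
    abs_dev = [abs(v - med) for v in values]
    mad = median(abs_dev)
    if mad == 0:
        # No spread around the median, so nothing can be flagged with this method.
        return []
    # 0.6745 scales the MAD so the score is comparable to a standard z-score.
    return [i for i, d in enumerate(abs_dev) if 0.6745 * d / mad > threshold]


# Hypothetical daily sales figures with one obvious drop on day index 5.
daily_sales = [820, 790, 845, 810, 805, 120, 830]
print(find_anomalies(daily_sales))  # [5]
```

In a real deployment a check like this would run on a schedule and trigger a notification for each flagged index, rather than printing the result.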

AI Anomaly Detector: Proactively Identifying Data Anomalies

AI Anomaly Detector is designed to perform regular anomaly checks on your data. Upon detecting any anomaly, it notifies …

Elaj Group and Al Borg Laboratories Innovation Day in Saudi Arabia

NLSQL visited Riyadh for in-person negotiations and a public presentation of the NLSQL Healthcare BI software to Board Members of Al Borg Diagnostics Laboratories and Elaj Group in Saudi Arabia.

Digital transformation with innovative technology adoption is a key part of Saudi Vision 2030.

The Saudi Arabian government's strategy aims at leadership in technology across the Middle East region and at growth in the non-oil share of revenue. As part of this program, Elaj Group reviewed and invited the most innovative technology companies, those expected to have a high impact on the main industries in the coming years.

An in-person event with four of the most promising companies was held in Riyadh, Saudi Arabia.

Denis from NLSQL took part in both the P2P negotiations and the in-person public technology presentations. NLSQL adoption in the Middle East market will improve operational excellence and bring even more real-time insights to all organisational levels. This will provide Saudi Arabia companies …